The branding & theme was named by my partner, Annie 🙂

I actually started this post as an addendum to my previous one, but realised there’s enough in here that I want to talk about, on both a personal and a technical level, that it warrants its own entry.

Why “Minty Charmander”?

Well, Annie thought the colour scheme reminded her of a Charmander, and combined with the light green highlights – “Mint” 🙂

The colour scheme uses a number of my favourite colours in a deliberately limited palette. As I recall from a design course I took a couple of decades ago, in UX a small number of colours that can be interchanged without conflicting with each other gets information across better than a large, dynamic swatch of colour.

I am using the ol’ trusty Bootstrap framework for the UI and layout of everything. I don’t have any real special rationale for using Bootstrap – it’s just what I’m most familiar with; I think as I ease myself back into coding from a long break, it’s nice to just crawl before I can walk, before I can run 😅

Planetscale?!

Laziness, and the idea that I needed a stable service to run a DB for my little projects, convinced me to continue with Planetscale. Yes, it costs $47 USD a month, but it’s more stable and better managed than anything I could ever do with a random self-hosted solution.

I’ve decided to keep paying for it for now, pending further efforts to self-host things down the track. In the meantime, it’s nice to have a DB that is:

- highly available;

- able to split itself into a main and a dev branch

I could probably implement this without a paid system. But the DB service works as a backend for multiple systems (as it would if I were to self-host), which makes it a single point of failure, and I couldn’t keep it up the way a professionally run service designed to stay online can.

End of the day, it’s actually pretty easy to justify the cost of this database for myself; and I’ve spent more on dumber stuff in the past. At least this is a sensible subscription 😜

So, what’s next?

Well, the branding is mostly done, but there are still a few things missing, like search result statistics and category browsing callouts, to name a couple. I’ll be taking my time fixing everything, and eventually hope to start using this blog a lot more to diary the things I get up to on a more personal level, as opposed to just dumping whatever pseudo-technical stuff comes across my mind!

As my homelab and private Discord community have grown, I’ve needed to roll out not just websites, but also web applications and services on various ports that need to be proxied back to a domain or subdomain address. The old setup was horrible… so I set about fixing it once and for all in November and December, 2022.

The Problem…

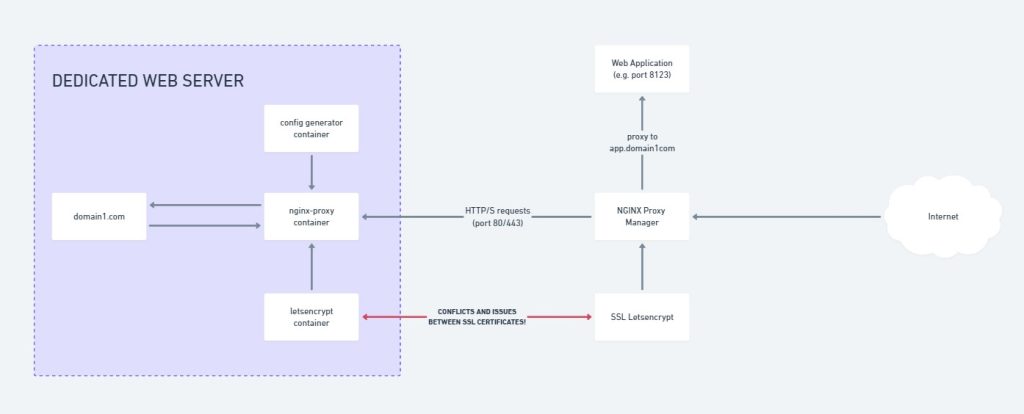

I’m stuck with something that looks like this:

This means that for each website I deploy, I’d essentially need to double proxy myself, and it was honestly a little confusing to work with SSL certificates.

How did it come to this?

One of the legacies of my time at Hostopia was building a Docker-based local test environment that was portable and rapidly deployable, using an nginx-proxy container with Apache containers for the websites behind it.

The beauty of this setup was that I could quickly roll out a website as needed anywhere with the magic of Docker. And for my initial purposes, that was fine.

The problem arises when I try to roll out secondary services like GitLab, Minecraft maps, game server UIs and so on, which all run on various non-standard HTTP(S) ports but need to be reverse proxied to subdomains (an example being https://map.northrealm.info, a Minecraft server map that runs on port 8123). I’d have to have ALL THOSE RESOURCES on a single server, or each additional server would need its own second proxy. This isn’t very efficient.

The second, bigger problem was organising and renewing SSL certificates. Tracking, renewing and creating certificates as needed was a hassle, because everything was double routed: first through Nginx Proxy Manager, then again on the local Docker container host the app/site was located on!

So as for why it was configured like this? A mix of speed, and laziness in doing things “The right way™”. What was supposed to be quick and easy eventually just became a hassle that wasn’t working properly.

The Solution

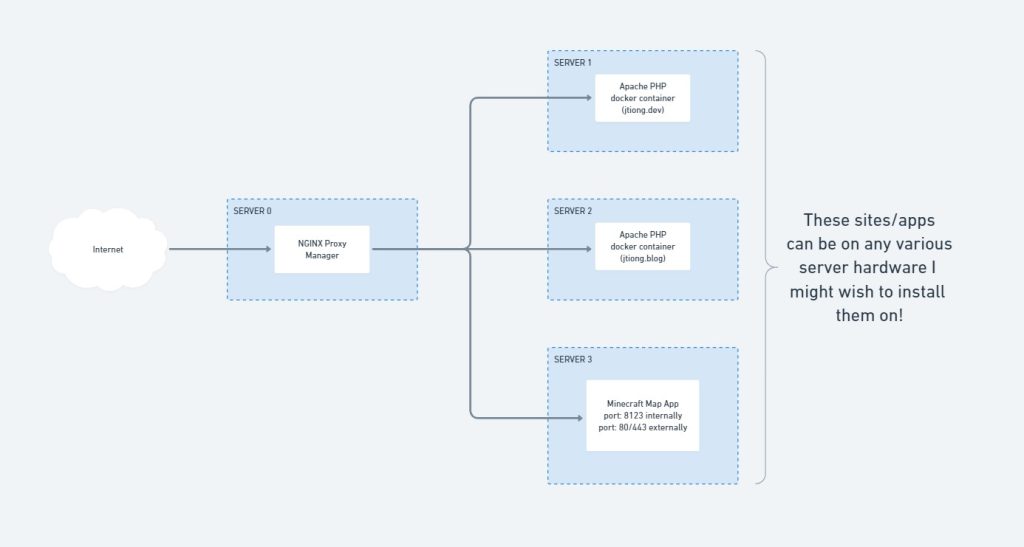

My services infrastructure now looks like this:

It might not seem like much, but it’s now server hardware agnostic, and I don’t need to install a separate cluster of containers on each server to manage its sites or apps locally.

Nginx Proxy Manager (NPM) now acts as that cluster of infrastructure containers, spanning the full home network as opposed to being tied down to one host. Custom nginx configurations are created “per host” in the app, and they handle how pages and content are served for sites, or direct traffic specifically for a given application.
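As a rough sketch of what a “per host” configuration boils down to (not the config NPM actually generates, and with SSL left out), here is a small Python helper that renders a plain nginx reverse-proxy block for one subdomain. The `ProxyHost` class, the function name and the LAN address are all made up for illustration; the domain and port are the Minecraft map example from earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProxyHost:
    """One subdomain forwarded to a service somewhere on the home network."""
    domain: str         # public (sub)domain
    upstream_host: str  # LAN address of the machine running the service
    upstream_port: int  # port the service listens on


def render_server_block(host: ProxyHost) -> str:
    """Render a minimal nginx reverse-proxy server block for one host."""
    return (
        "server {\n"
        "    listen 80;\n"
        f"    server_name {host.domain};\n"
        "    location / {\n"
        f"        proxy_pass http://{host.upstream_host}:{host.upstream_port};\n"
        "        proxy_set_header Host $host;\n"
        "        proxy_set_header X-Real-IP $remote_addr;\n"
        "    }\n"
        "}\n"
    )


# 192.168.1.50 is a hypothetical LAN address, not the real one.
minecraft_map = ProxyHost("map.northrealm.info", "192.168.1.50", 8123)
print(render_server_block(minecraft_map))
```

The point is that the proxy only needs a domain, an address and a port, so the service can live on any machine on the network.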

Much better I say! 😀

*Facepalm*

There are plenty of ways to skin a cat, and this is definitely better than the original setup! It’s not a perfect solution, but this blog post wasn’t written in an attempt to find absolute perfection (I believe it’s something to strive for, but you can’t achieve it unless you’re a divine power). It’s more to document the journey of my ignominy and learnings as I go about running a homelab that actually gets some use 🙂

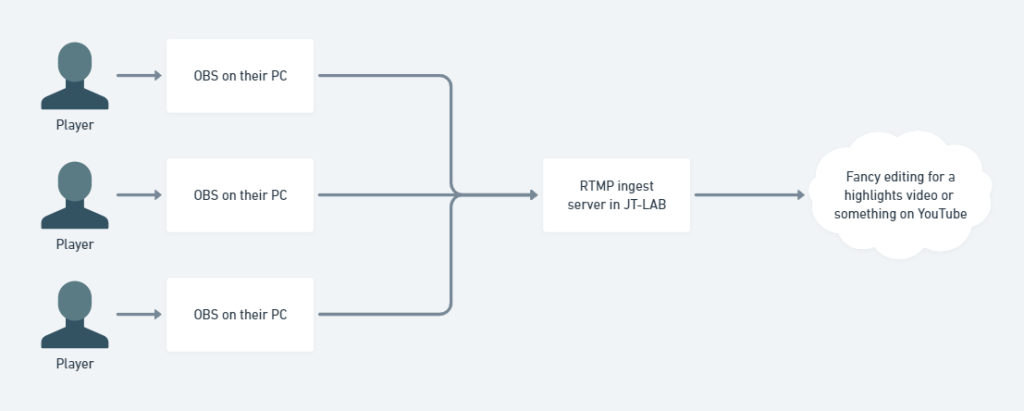

So, my friends are big proponents of using https://ossrs.io (Open Source Simple Realtime Server) – which (in the way I’m intending to use it) will ingest RTMP streams from various OBS clients (my friends) and save their footage in a folder, which I can then use to edit various highlight reels from our gaming nights together.

The benefits are:

- Storage – it’s saved on my end, so friends don’t need to worry about storage

- Speed – the footage is broadcast directly to me and saved, they don’t need to send me files after the fact

- Simplicity – they don’t need to worry about anything – most of them have OBS configured for streaming already

It works something like:

Pretty straightforward, pretty easy.

By default I’ve configured everything to save in 1800-second long .flv files (30 minutes) – this could be mkv or otherwise, but the quality is fine enough for my non-cinema-quality productions.
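To get a feel for how many files a night of gaming produces at that segment length, here is a quick back-of-the-envelope sketch in Python. It assumes segments are cut exactly on the 1800-second boundary, which is a simplification (a recorder may wait for a keyframe before cutting), and the 3h20m session is a hypothetical example.

```python
import math

SEGMENT_SECONDS = 1800  # 30 minutes, the segment length mentioned above


def segment_count(session_seconds: int, segment_seconds: int = SEGMENT_SECONDS) -> int:
    """Number of recording files a session of the given length produces."""
    if session_seconds <= 0:
        return 0
    return math.ceil(session_seconds / segment_seconds)


def segment_ranges(session_seconds: int, segment_seconds: int = SEGMENT_SECONDS) -> list:
    """(start, end) offsets in seconds for each file; the last one may be shorter."""
    return [
        (start, min(start + segment_seconds, session_seconds))
        for start in range(0, max(session_seconds, 0), segment_seconds)
    ]


# A hypothetical 3 hour 20 minute gaming night, per streamer
night = 3 * 3600 + 20 * 60
print(segment_count(night))        # number of files
print(segment_ranges(night)[-1])   # offsets of the final, shorter file
```

Multiply the count by the number of friends streaming and it is easy to see why some sort of asset management becomes the next problem.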

It’s a convenient little service I’ve set up now for any future gaming nights as well. It’ll be great to get footage as needed and stored.

Now to find some sort of Media Asset Management system…

I’ve got several servers which I work on, and quite often, this involves running regular cron’d tasks that perform various backups and configuration updates for me at odd schedules (as an example, my Rust server wipes fortnightly, and needs a config update to change the server name to reflect the last date wiped).
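For illustration, the Rust example could look something like the sketch below. It is written in Python rather than PHP purely as an example, and it assumes the name is set by a `server.hostname "..."` line in the server’s config file; the server name, date format and function name are all hypothetical.

```python
import re
from datetime import date


def update_hostname(cfg_text: str, base_name: str, wiped_on: date) -> str:
    """Replace the server.hostname line so it carries the last wipe date."""
    new_line = f'server.hostname "{base_name} | Wiped {wiped_on:%d/%m/%Y}"'
    pattern = re.compile(r"^server\.hostname\s+.*$", re.MULTILINE)
    if pattern.search(cfg_text):
        # A function is used as the replacement so nothing in it is treated as an escape
        return pattern.sub(lambda _match: new_line, cfg_text, count=1)
    # No hostname line yet: append one, keeping the file newline-terminated
    prefix = cfg_text if (not cfg_text or cfg_text.endswith("\n")) else cfg_text + "\n"
    return prefix + new_line + "\n"


# Hypothetical config contents and wipe date
cfg = 'server.hostname "JT Rust"\nserver.maxplayers 50\n'
print(update_hostname(cfg, "JT Rust", date(2022, 12, 1)))
```

A cron entry would then only need to read the config file, pass it through this function and write it back after each wipe.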

To do things like this, I’ve usually just written a script in PHP and run that at a given interval (daily or otherwise). There’s no real reason I chose PHP for these scripts aside from familiarity with the language, and no doubt the same could easily be achieved with Python, shell script or any other language out there.

For now though, PHP serves my needs just fine.

The problem is, I don’t actually keep these scripts backed up anywhere, or organised in any sort of manner!

The age of GitLab

Over the last couple days, I’ve implemented GitLab into my homelab stack (JT-LAB), and will be using it to store most of my code as a “source of truth” and subsequently sync things to GitHub afterwards (depending on the projects of course).

To the Game Servers, Four Branches…

Specific branches would be used for the various server types. For now, these would be:

- Rust

- Minecraft

- Factorio

- Satisfactory

Each game would be represented by its own branch, and each branch would deploy its own set of commands as needed. For the most part, only Minecraft is persistent; the rest rely on either a voted wipe or a scheduled wipe paradigm.
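One way to picture the branch idea is a simple lookup from branch name to what should run there. This is only a sketch under assumptions: the task names are invented placeholders, and the only facts taken from the post are the four branches and that Minecraft is the persistent one.

```python
from enum import Enum


class WipePolicy(Enum):
    PERSISTENT = "persistent"  # world is kept, so the world itself needs backing up
    WIPED = "wiped"            # voted or scheduled wipe


BRANCH_POLICY = {
    "rust": WipePolicy.WIPED,
    "minecraft": WipePolicy.PERSISTENT,
    "factorio": WipePolicy.WIPED,
    "satisfactory": WipePolicy.WIPED,
}


def tasks_for(branch: str) -> list:
    """Return the (hypothetical) task names a branch would run."""
    policy = BRANCH_POLICY[branch.lower()]
    if policy is WipePolicy.PERSISTENT:
        return ["backup_world"]
    return ["backup_config", "update_config_after_wipe"]


for name in BRANCH_POLICY:
    print(name, tasks_for(name))
```

Keeping the mapping in one place means adding a new game is a one-line change plus a new branch.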

To the File Systems, Five Branches…

Then we have servers with actual file resources and assets that I’d like to keep; things like Photos, Design Assets, old code references, etc. These would be:

- Media

- Design

- Research

- Education

- Maintenance

And nine, nine branches were gifted to the Websites

I also run a number of websites for friends and family on a pro-sumer level. I won’t really list these projects, but they do total up to 9! So it all kind of fits the whole LOTR theme I was going for with these titles.

One Repo to Rule them all…

The decision to build everything into one repository to manage all the core backup operations means I have less to track; for a personal system, I think this is fine. Monolithic design probably isn’t the way to go for a much larger operation than mine though!

Announcing…

Cronjobs

So this is the hypothesized project I’d like to build over the next few days; in combination primarily with jtiong.dev which will help track the commits and such that I do. Writing these projects up here as project whitepapers on a more formal basis might help with some resume stuff going forward for my future career 🙂

With the implementation of my JT-LAB homelab, it stands to reason I should probably self-host whatever I can, to try and get decent use out of the stupid amount of money I’ve poured into the project. Being able to ensure I’m only sharing the data that I want to share (for whatever reason) is pretty important to me as well.

To date, the majority of my code has been stored on GitHub (which is fine, it’s fantastic and it’s an amazing free resource for the world). But my workplace had an implementation of GitLab that I thought was done pretty well.

So, I’m going to take it upon myself to implement GitLab into JT-LAB, and make sure there is a version of my work that runs from GitLab. GitHub will essentially become my backup for code (GitHub being way more reliable in uptime than anything I’d run myself, like GitLab).
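A minimal sketch of the “GitLab first, GitHub as backup” idea is a mirror push from a local clone, wrapped in Python here so it could run on a schedule. The function names are made up and the remote URL is a placeholder, not a real repository. Note that `git push --mirror` makes the remote match the local refs exactly, including deleting refs that no longer exist locally, so it only makes sense when the second remote really is just a backup.

```python
import subprocess


def mirror_push_command(remote_url: str) -> list:
    """Build the git command that mirrors every ref to a second remote."""
    return ["git", "push", "--mirror", remote_url]


def mirror_to_backup(repo_path: str, remote_url: str) -> None:
    """Run the mirror push from inside a local clone of the GitLab repo."""
    subprocess.run(mirror_push_command(remote_url), cwd=repo_path, check=True)


# Placeholder URL for illustration only
print(" ".join(mirror_push_command("git@github.com:example-user/example-repo.git")))
```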

Why is this so important?

GitLab is going to work as my core repository and project management system. With it, I’ll be able to store and update my code for the various projects previously mentioned.

What’s the challenge?

Well, after some wrestling it’s implemented – however, the one area that I’m most unsure about is the Auto DevOps feature of GitLab.

Lots to learn!

So it’s been several days since my last post about C States and power management in the Ryzen stuffing up Unraid OS.

I’m happy to report that things have been rock solid and for the last 90 hours or so, I’ve been solidly downloading my backups from Google Drive (yes, many years worth of data) onto the server. At the same time it’s been actively running as an RTMP bridge for all the security cameras around my house, and as an internal home network portal – all without falling over.

Here’s hoping I didn’t just jinx it….

Update: 8th August 2022 – Unraid’s been running solidly for 10+ days now!

I recently chose to go the Unraid route with my media storage server; I was lucky enough to be given a license for Unraid Pro, and straight up, let me say:

- It’s easy to use

- It’s beautiful to look at

- It’s stupid simple to get working

BUT…

My server uses an old spare desktop I had lying around:

- AMD Ryzen 7 1700 (1st generation Ryzen)

- 32GB DDR4 RAM

- B450 based motherboard

But therein lies the problem. It turns out that Ryzens crash and burn with Unraid by default. You need to go into your BIOS settings and turn off the Global C-States power management setting. Insane.

Why am I writing about this?

Because it took me 2 weeks to reach this point: wrestling with Windows storage, wrestling with shoddy backplanes in my ancient server chassis (for which I then ordered a replacement case that set me back a pretty penny), a new SAS controller, new SAS cables…

This is an expensive hobby, homelabs.

Local media storage. Yeah.

That’s right, I’m running Windows 10 Pro for a home server 😂

It’s been good so far. The machine is pretty old, but it’s there for running things like local media storage, and maybe a few other things that aren’t GPU reliant. It has an ancient PCIe x1 GPU in it (a GT 610 haha) that can’t really do anything more than let me remote in and work on the PC.

Although I do definitely want to run:

- Core Keeper

- V Rising

On the PC for friends and family to check out 🙂

Storage is a bit interesting; I forked out for StableBit Drive Pool and StableBit Scanner (there’s a bundle you can get) and it’s a simple GUI to just click +add to expand my storage drive with whatever randomly sized hard drives I have.

Why’d I do this instead of the usual ZFS or Linux-based solution?

Mostly to keep my options open. It’s nice seeing a GUI, and as long as Windows can handle my needs for my local network, that’s enough: I’m not doing anything extremely complicated, and the “server” it’s on is going to act as a staging ground for anything pre-gdrive archive.

I could just as easily (probably more easily) achieve the same results with something like Ubuntu Server, except for the game servers mentioned above. Some games just run much better on a Windows host, so that’s what this machine is for.

The JT-LAB rack is finally full; all the machines contained within are the servers I intend to have fully operational and working on the network! Although not all of them are turned on right now. There’s a few machines that need some hardware work done on them; but that’s a weekend project, I think 🙂

So the new Minecraft version is out, and with it I’ve created a new Vanilla server for my friends to play on.