As my homelab and private Discord community have grown, I’ve needed to roll out more than just websites: there are now web applications and services running on non-standard ports that all need to be proxied back to a domain or subdomain. The old setup was horrible… so I set about fixing it once and for all in November and December 2022.

The Problem…

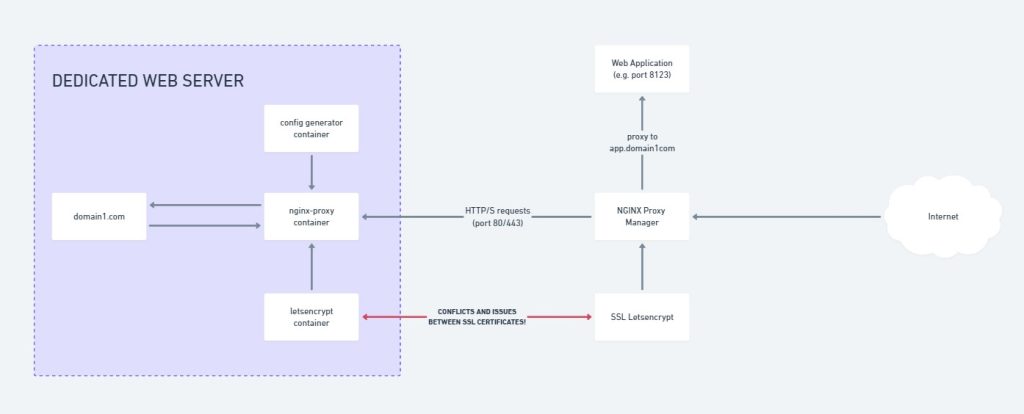

I’m stuck with something that looks like this:

This means that for each website I deploy, I essentially have to double proxy myself; honestly, it also made SSL certificates a little confusing to work with.

How did it come to this?

One of the legacies of my time at Hostopia was building a Docker-based local test environment that was portable and rapidly deployable, using an nginx-proxy container with Apache containers for the websites behind it.

The beauty of this setup was that I could quickly roll out a website as needed anywhere with the magic of Docker. And for my initial purposes, that was fine.

The problem arises when I try to roll out secondary services like GitLab, Minecraft maps, game server UIs and so on. These all listen on various non-standard ports, but need to be reverse proxied to subdomains (an example being https://map.northrealm.info, a Minecraft server map that runs on port 8123). I’d either have to keep ALL THOSE RESOURCES on a single server, or turn each additional server into yet another double proxy. This isn’t very efficient.

The second, and bigger, problem was organising and renewing SSL certificates. Tracking, renewing and issuing certificates was a hassle, because traffic was being double routed: first through Nginx Proxy Manager, then again through the proxy on the local Docker host where the app or site lived!
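To illustrate the tracking part of that hassle, here is a minimal Python sketch (not what was actually run in this homelab) that reports how many days a certificate has left. The 30-day renewal window and the function names are assumptions made up for this example; the timestamp format is the one Python’s `ssl` module returns under `getpeercert()["notAfter"]`.

```python
import socket
import ssl
from datetime import datetime, timezone

# Assumed renewal window for this sketch; not a value from the original setup.
RENEW_WITHIN_DAYS = 30


def fetch_not_after(hostname: str, port: int = 443) -> str:
    """Connect over TLS and return the certificate's notAfter string."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Days between `now` and a notAfter string like 'Jan  5 09:34:43 2018 GMT'."""
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now).total_seconds() / 86400


def needs_renewal(not_after: str, now: datetime,
                  window_days: int = RENEW_WITHIN_DAYS) -> bool:
    """True when the certificate expires within the renewal window."""
    return days_until_expiry(not_after, now) <= window_days
```

Calling `needs_renewal(fetch_not_after("map.northrealm.info"), datetime.now(timezone.utc))` would check one public hostname. With two proxy layers, a check like this would presumably have to be repeated against the inner Docker host as well, which gives a feel for why the double routing got tedious.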

So as for why it was configured like this? A mix of speed, and laziness in doing things “The right way™”. What was supposed to be quick and easy eventually just became a hassle that wasn’t working properly.

The Solution

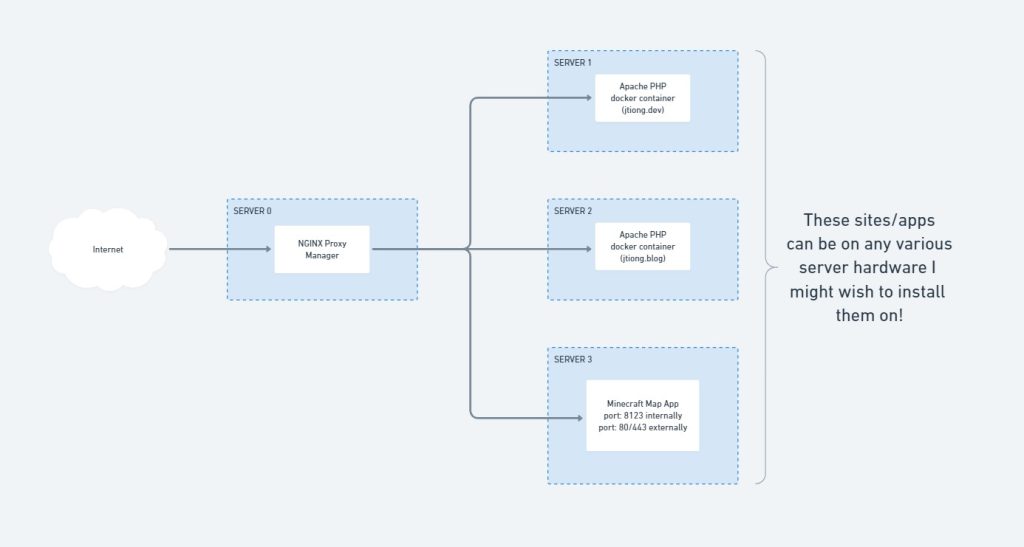

My services infrastructure now looks like this:

It might not seem like much, but it’s now server hardware agnostic, and I no longer need to install a separate cluster of containers on each server just to manage the sites or apps that live there.

Nginx Proxy Manager (NPM) now acts as that cluster of infrastructure containers, spanning the full home network as opposed to being tied down to one host. Custom nginx configurations are created “per host” in the app, and they handle how pages and content are served for sites, or direct traffic to a specific application.

Much better I say! 😀

*Facepalm*

There are plenty of ways to skin a cat, and this is definitely better than the original setup! It’s also not a perfect solution, but this blog post wasn’t written in an attempt to find absolute perfection (I believe it’s something to strive for, but you can’t achieve it unless you’re a divine power). It’s more to document the journey of my ignominy and learnings as I go about running a homelab that actually gets some use 🙂

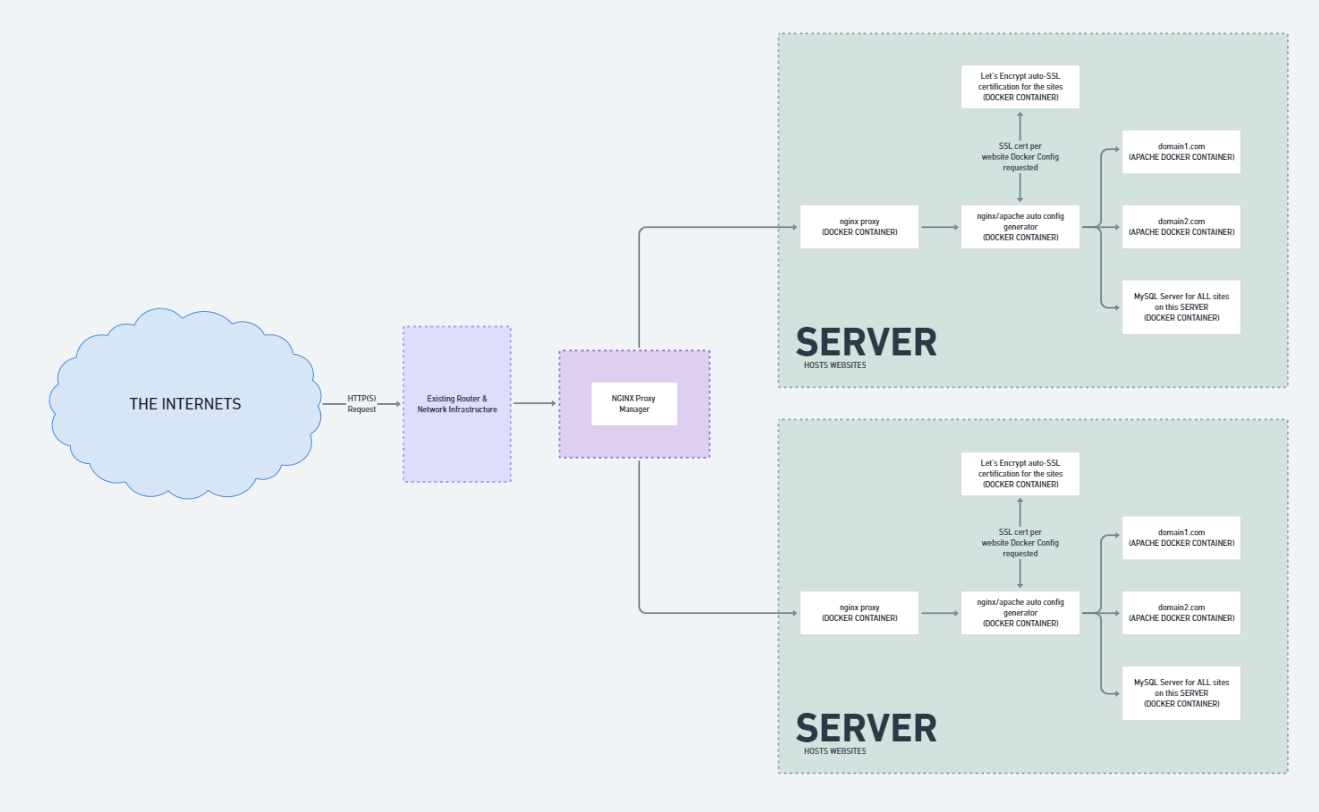

So recently with the new hardware acquisitions for the Rack, and having more resources to do things; I’ve been looking at ways to host multiple sites across multiple servers.

The properly engineered and much heavier way to do things would be to run something like a Docker Swarm, or a Proxmox HA Cluster; something that uses the high availability model and keeps things running. However, honestly, I haven’t quite reached that stage of things, or rather, I think there are too many unknowns (to me) with what I want to do.

What I want to achieve

I want to be able to set up my servers in such a way that these websites keep running: should the hardware fail, they’ll be redeployed with minimal input from me, reducing the effort and cost of keeping things running. The problem I’m trying to solve is two-fold:

- I want to separate my personal projects away from the same server as my paying clients

- I’d like to get High Availability working for these paying clients

The Existing Stack

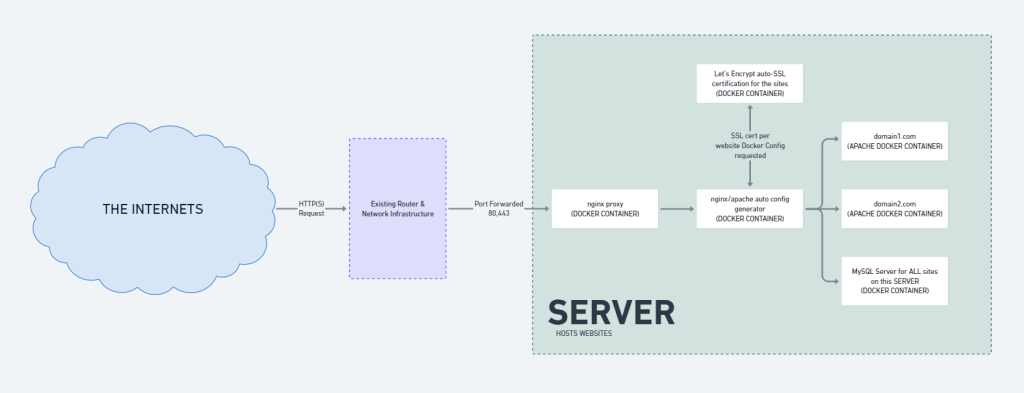

The way I served my website content out to the greater world was pretty basic. It involved a bunch of docker containers, and some local host mounts – all through Docker Compose. It looked something like this:

Overall, it’s quick, it’s simple to execute and do backups with; but it’s restricted to a single physical server. If that server were to have catastrophic hardware failure, that’d be that. My sites and services would be offline until I personally went and redeployed them onto a new server.

The “New Stack” a first step…

So what’s the dealio?

Well, my current webserver stack uses an NGINX reverse proxy to pass traffic to the appropriate website containers; but what if these containers are on MULTIPLE servers? Taking my sister’s and my personal website projects as an example:

| Sarah’s Sites (server 1) | JT’s Sites (server 2) |
| --- | --- |
| sarahtiong.com | jtiong.com |
| store.sarahtiong.com | jtiong.blog |

The above shows how the sites could be distributed across 2 different servers. The problem is that I can only route port 80 and 443 (HTTP/HTTPS) traffic to one IP at a time. The solution?

NGINX Proxy Manager. This should be a drop-in solution on top: install it on a new, third server, route all traffic from the internet to it, and it’ll point each request to the right server as needed.

Something like this:

I’m still left with some single points of failure (my router and the NGINX Proxy Manager server), but the workload is spread across multiple servers in terms of sites and services. Backing up files and configurations all seems relatively simple. I’m left with a lot of snowflake situations, but I can afford that: the technical debt isn’t so great, as it’s a small number of servers, sites, services and configurations to manage.
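The routing job that the proxy server takes on can be pictured as a lookup from the requested hostname to the server that hosts it. The Python sketch below only illustrates that idea and is not how NGINX Proxy Manager is implemented; the LAN addresses, the port and the names `SERVERS`, `SITE_TO_SERVER` and `resolve_upstream` are all hypothetical, and only the site-to-server split comes from the table above.

```python
from typing import Optional

# Hypothetical LAN addresses; the post does not list the real ones.
SERVERS = {
    "server1": "192.168.1.11",
    "server2": "192.168.1.12",
}

# Site distribution taken from the table in this post.
SITE_TO_SERVER = {
    "sarahtiong.com": "server1",
    "store.sarahtiong.com": "server1",
    "jtiong.com": "server2",
    "jtiong.blog": "server2",
}


def resolve_upstream(host_header: str) -> Optional[str]:
    """Map an incoming Host header to the backend URL that should serve it.

    Returns None for hostnames the proxy does not know about.
    """
    # Drop any ":port" suffix and normalise case before the lookup.
    hostname = host_header.split(":")[0].strip().lower()
    server = SITE_TO_SERVER.get(hostname)
    if server is None:
        return None
    # Assumes each backend serves plain HTTP on port 80 behind the proxy.
    return f"http://{SERVERS[server]}:80"
```

Under these assumptions, `resolve_upstream("jtiong.blog")` points at server 2 and `resolve_upstream("store.sarahtiong.com")` at server 1, while an unknown hostname returns `None`. Moving a site to another server then means changing a single entry in the table.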

So for the time being; this is the new stack I’ve rolled out to my network.

Coming soon though, the migration of everything from Docker Containers to High Availability VMs on Proxmox! Or at least, that’s the plan for now… Over Easter I’ll probably roll this out.

Since the start of the year, I’ve been working towards making the tech I use in my everyday life a whole lot more comfortable and less cluttered.

I’ve been looking into a minimalist lifestyle after realizing whilst trying to plan on moving out – that I have way too much crap in my life to accommodate such a move.

There’s a pretty simple rule/goal I keep in mind now with each of the gadgets, tech or ideas I have:

It should, as seamlessly as possible, integrate into my everyday life and tasks. I shouldn’t have to worry about how I’m doing something, or if I can do something.

And the best way I can think of to do that is to no longer be tied to a desk for all my programming, design, development, gaming and media consumption.

It would enable me to have a much more enriched quality of life, being able to go out, and adventure around, and when it all gets a bit much, I can just reach through the internet and hug the comfort of my favourite IDE, or enjoy something from my personal (and carefully curated) media collection.

I’m going to need to join The Cloud™. I’ll be calling this experiment “Project Cloud Citizen”!

Sounds alright – and I think, very doable if you were based in North America, Western Europe, Korea, Japan, Singapore or Scandinavia. Coincidentally, friends in all those regions are the ones who talked to me about this.

It’s a way more difficult thing to achieve in Australia, where traditionally, the concept of a decent upload speed for data sharing and enrichment, hasn’t existed until the arrival of Netflix, and even then, leaders of society in Australia still think it’s just next-gen TV.

Getting away from the office desk at home

As it currently stands, I’m fortunate enough to work at an office that allows me to keep a laptop present in the office, that in theory, is connected at all times.

This laptop isn’t a slouch in terms of specs:

- Gigabyte Aero 14

- Intel i7-7700HQ

- NVIDIA GeForce GTX 1060

- Kingston 16GB (1 x 16GB)

- Gigabyte P64v7 Motherboard

- 500GB SSD (TS512GMTS800)

- Windows 10 Home

Why do this?

Reason #1

I want to be untethered from the restrictions of only being able to show friends & family games, or media that would be accessible within my home office.

I’d like to be able to develop code and access a remote system that is my own without having to carry around or go through an elaborate setup process.

Nowadays, more than ever, a combination of my iPad Pro and Samsung Note 8 covers all my usage that isn’t coding or gaming. And even then, they begin to encroach on coding, and sometimes gaming!

Reason #2

The room where I use my PC at home turns into an oven with my current setup. No joke: I run an incredibly complicated setup that I think is overkill for pretty much everybody except the most hardcore of PC gamers.

It’s messy, it’s finicky, it’s expensive as all heck and it provides the best damn gaming experience I’ve had the pleasure of using.

But in the sweltering Australian summer, it’s untenable with my neighbour’s air conditioning exhaust being about a metre away from my window, and the combined heat of my PC + 3 monitors, and consoles + TV, it becomes somewhat unhealthy, if not overly sweaty.

This is cheaper than buying air-conditioning myself

Reason #3

On a personal level, I feel like the main reason I don’t want to go somewhere, or spend time outside of the haven I’ve built at home, is that I don’t have access to my files and work to tinker with as I go along.

Coding and tinkering with various web projects has become almost a safety blanket for me.

The first test

Over the course of a weekend, I went ahead and did some very rudimentary testing of some functions I’d be performing.

Of course; a speed test is in order:

I’m pretty content with the speeds! My main concern was the upload speed of my laptop; which as you can see, can more than handle the 1080p streaming I was intending to do with it.
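Whether an upload speed can “handle” a stream is simple arithmetic, and a rough Python sketch of it looks like the following. Everything numeric here is a placeholder: the bitrate, the safety margin and the function names are assumptions for illustration, not the results of the speed test above.

```python
def upload_headroom_mbps(upload_mbps: float, stream_mbps: float,
                         viewers: int = 1,
                         safety_margin: float = 0.25) -> float:
    """Upload bandwidth left over after serving `viewers` streams.

    `safety_margin` holds back a fraction of the link for everything else
    (remote desktop traffic, background sync and so on).
    """
    usable = upload_mbps * (1.0 - safety_margin)
    return usable - stream_mbps * viewers


def can_stream(upload_mbps: float, stream_mbps: float,
               viewers: int = 1, safety_margin: float = 0.25) -> bool:
    """True when the link can carry all streams within the safety margin."""
    return upload_headroom_mbps(
        upload_mbps, stream_mbps, viewers, safety_margin
    ) >= 0
```

For example, with a hypothetical 40 Mbps upload and an assumed 8 Mbps per 1080p stream, `can_stream(40.0, 8.0, viewers=2)` is true, while the same two streams on a 10 Mbps upload would not fit.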

I’m surprised the USB 3.0 to Ethernet dongle I was using didn’t crap out! (cheers to my mate: Matt for providing the adapter)

Note to self though, in the future I’ll need to take photos or screenshots of my screen streaming for image quality comparisons (I know streaming will always be worse in terms of visual acuity, but by how much is worth quantifying)

Gaming

Over the weekend, I used a combination of TeamViewer, Hamachi and Steam In-Home Streaming to get a few games going. The image quality felt something akin to watching a Twitch stream; there was occasional ribboning of colours in fast-moving games, but aside from that, it worked flawlessly. The almost low-spec restrictions of the laptop forced me to consider playing some of the more indie games in my backlog too.

Rocket League, Hammerwatch and Torchlight all got a go, and I have to say, the only times the frames or input stuttered came down to the lack of power behind the laptop and its unoptimised configurations (the games were all set to high settings etc.).

Media

I played a couple of movies through Plex to both a friend and myself simultaneously (Kingsman is a great movie!).

The quality was superb, and stress on the laptop was more than manageable!

Productivity

Admittedly, I did this through TeamViewer, which is rubbish for such situations anyway. However, it was acceptable! There was some input lag, but that’s more TeamViewer’s crappiness as opposed to any laptop issues. I expect this to be resolved with proper Remote Desktop access (I’ll need to change to Windows 10 Pro).

Overall, I think the first test was a success, and it’s time to start planning a serious configuration for this application!

I’ll try to keep it well documented 😛