April and May have been busy, both technically at work and at home with JT-LAB stuff. Work’s been crazy: I worked through 3 consecutive weekends to get a software release out the door, on top of handling some pretty crazy client requests recently.

I had the opportunity to partially implement a one-node version of my previous plans, and ran some personal tests comparing one server running as a single Proxmox node against a similarly configured server running just Docker containers.

I think I can confidently say that, for my personal needs and until I get something incredibly complicated going, sticking to a Dockerised setup for hosting all my sites is the way to go. Here are some of the pros and cons I felt applied:

The Pros of using HA Proxmox

- Uptime

- Security (everyone is fenced off into their own VM)

The Cons of using HA Proxmox

- Hardware requirements – I need at least 3 nodes, ideally an odd number, to maintain quorum; otherwise I need a QDevice.

- My servers idle at something between 300 and 500 watts of power;

- this equates to approximately $150 per quarter on my power bill, per server.

- Speed – it’s just not as responsive as I’d like, and hopping between sites to do maintenance (as a one-man shop) means logging in and out of various VMs.

- Backup processes – I can back up the entire image, but backing up and restoring a VM after a critical failure isn’t as quick as I’d hoped.
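To show why the node count matters, here is a minimal sketch of the majority-vote arithmetic behind quorum. It assumes one vote per node, one extra vote for a QDevice, and quorum defined as a strict majority of all expected votes. As far as I understand, Corosync’s votequorum works along these lines by default, but special modes such as two_node are ignored here.

```python
def quorum_threshold(total_votes: int) -> int:
    """Smallest number of votes that is a strict majority of total_votes."""
    return total_votes // 2 + 1


def survives(nodes: int, failed: int, qdevice: bool = False) -> bool:
    """True if the remaining votes still reach quorum after `failed` nodes drop out.

    Assumes one vote per node, plus one extra vote for a QDevice that stays up.
    """
    total = nodes + (1 if qdevice else 0)
    remaining = total - failed
    return remaining >= quorum_threshold(total)


for nodes, qdevice in [(2, False), (2, True), (3, False), (4, False), (5, False)]:
    # Largest number of simultaneous node failures the cluster can tolerate.
    tolerated = max(f for f in range(nodes + 1) if survives(nodes, f, qdevice))
    label = f"{nodes} nodes" + (" + QDevice" if qdevice else "")
    print(f"{label}: quorum needs {quorum_threshold(nodes + int(qdevice))} votes, "
          f"tolerates {tolerated} node failure(s)")
```

Under these assumptions, two nodes on their own can’t lose either one, and a QDevice or a third node fixes that. A fourth node raises the threshold without letting the cluster survive an extra failure, which is why odd numbers are preferred.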

The Pros of using Docker

- Speed – it’s all on the one machine, so there’s no hopping between VMs

- IP range is not eaten up by various VMs

- Containers use as much or as little as they need to operate

- Backup processes – simple; I can literally just copy the Docker mount directory as I see fit

- Hardware requirements – I have the one node, which should be powerful enough to run all the sites;

- I’ve acquired newer Dell R330 servers which idle at around 90 watts of power

- this would literally cut my power bill per server by two thirds or more each quarter
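Here is a rough check of the power figures, assuming a constant idle draw around the clock and a 91-day quarter. I haven’t stated a tariff, so the sketch works backwards from the ~$150 per quarter figure to the implied price per kWh. Treat every number here as an estimate.

```python
HOURS_PER_QUARTER = 24 * 91  # assumption: a 91-day quarter, server idling 24/7


def kwh_per_quarter(watts: float) -> float:
    """Energy used in one quarter at a constant draw of `watts`."""
    return watts * HOURS_PER_QUARTER / 1000


def reduction(old_watts: float, new_watts: float) -> float:
    """Fractional drop in energy (and therefore cost) moving from old to new draw."""
    return 1 - new_watts / old_watts


for old in (300, 500):
    # Price per kWh implied if this server costs ~$150 per quarter.
    implied_tariff = 150 / kwh_per_quarter(old)
    print(f"{old} W -> {kwh_per_quarter(old):.0f} kWh/quarter, "
          f"implied ~${implied_tariff:.2f}/kWh, "
          f"an R330 at 90 W saves {reduction(old, 90):.0%}")
```

Since the saving fraction doesn’t depend on the tariff, going from 300 to 500 W down to 90 W works out to a drop of roughly 70% to 82%. Two thirds is, if anything, on the conservative side.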

The Cons of using Docker

- Uptime is not as guaranteed – with a single point of failure, the server going down would take down ALL sites that I host

- Security – yes, I can jail users as needed, but if someone breaks out, they’ve got access to every site and the server itself

All in all, the pros of Docker outweigh the cons, and the cons can be mitigated fairly easily given how quickly I can copy files or flick configurations across to another server (I’ll have some spares sitting around).
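The Docker backup story is essentially “copy the mount directory”, so here is a minimal Python sketch of that step. It assumes all container data lives under a single host directory that the containers bind-mount. The `backup_mount` helper and the paths are hypothetical. Ideally the containers are stopped (or their databases dumped) first, so that the copy is consistent.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_mount(source: Path, backup_root: Path) -> Path:
    """Copy the whole Docker mount directory into a new timestamped folder.

    Hypothetical helper: `source` is assumed to be the host directory that the
    containers bind-mount. Intermediate folders under `backup_root` are created.
    """
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    destination = backup_root / f"{source.name}-{stamp}"
    # copytree recreates the directory tree, copying files with copy2 (which keeps
    # timestamps); symlinks=True copies symlinks as links instead of following them.
    shutil.copytree(source, destination, symlinks=True)
    return destination
```

Usage would be something like `backup_mount(Path("/srv/docker"), Path("/mnt/backups"))`. Restoring onto a spare server would presumably be the reverse copy before bringing the containers back up.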

I’ve been a little burnt out over April and May, not to mention catching COVID from the end of April into the start of May. I ended up taking a week of unpaid leave, which, combined with a fresh PC upgrade, has stretched the budget a bit.

Time to start building up that momentum again and get things rolling. Acquiring two Dell R330 servers means I have some newer-generation 1RU machines to move to, freeing up some of the older hardware, and the new PC build frees up some other resources too.

Exciting Times 😂

It’s been about a week since I decided to properly up my game with home services in the server rack and convert a room in my house into the “JT-LAB”. I’ve blogged about having to learn to re-rack everything and setting up a kind of double-NGINX-proxy situation, not to mention setting up this blog so I have a dedicated rant space instead of using my main jtiong.com domain.

As I’ve always wanted to keep things running with “minimal maintenance” in mind going forward, it makes more and more sense to deploy a High Availability cluster. I’ve been umm’ing and ahh’ing about Docker Swarm, VMware and Proxmox, and I think I’ll be settling on Proxmox’s HA cluster implementation. The price (free!) and the community size (for searching for answers) are very convincing, so this blog post is about my adventures implementing a Proxmox HA cluster using a few servers in the rack.

What are the benefits of going the Proxmox HA route?

Simply, high availability. I have a number of similarly spec’d servers, and forming a cluster means the uptime of the VMs (applications, sites, services) I run is maximized. Maintenance has minimal impact on what’s running: I could power down one node and the other nodes would take up the slack, keeping the VMs running while I do said maintenance.

Uptime – similarly, if hardware fails, I could keep running the websites I have paying customers for, with minimal concern about a prolonged period of downtime.

So, that sounds great, what’s the problem?

I’m rusty. I’ve not touched Proxmox in about a decade, and on top of that, I actually already have a node configured, but incorrectly: VMs currently use the local storage on the single cluster node, so I need to find a way to change this.

The suggested approach, if all the nodes have similar storage setups, is to use ZFS storage replication between the nodes, so that they all have access to the same VM disks as needed. By default, Proxmox sets replication between the nodes to every 15 minutes per VM. That seems pretty excessive, and would need really fast interconnects between servers (10 Gbit) to keep up reliably.
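Here is a back-of-the-envelope sketch showing why the interconnect matters. It estimates how long one replication run would take to ship a given amount of changed data over 1 Gbit and 10 Gbit links. The 20 GB of changed data per window and the 80% effective link utilisation are made-up assumptions, not measurements. ZFS replication only sends the blocks that changed since the last snapshot, so the real delta depends entirely on the workload.

```python
REPLICATION_WINDOW_S = 15 * 60  # Proxmox's default replication schedule: every 15 minutes


def transfer_seconds(changed_gb: float, link_gbit: float, efficiency: float = 0.8) -> float:
    """Seconds to move `changed_gb` gigabytes over a `link_gbit` Gbit/s link.

    `efficiency` is an assumed fraction of line rate actually achieved.
    """
    bits = changed_gb * 8e9  # decimal GB -> bits
    return bits / (link_gbit * 1e9 * efficiency)


changed_gb = 20  # hypothetical amount of changed data across all VMs per window
for link in (1, 10):
    secs = transfer_seconds(changed_gb, link)
    share = secs / REPLICATION_WINDOW_S
    print(f"{link} Gbit: {secs:.0f} s per run ({share:.0%} of the 15-minute window)")
```

With these made-up numbers, a 1 Gbit link would spend about 22% of every window replicating, compared with roughly 2% at 10 Gbit.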

There’s a lot of factors to go through with this…

**is perplexed**

For some bizarre reason, WordPress has started hyphenating my posts. I don’t recall it ever doing this when I used WordPress all those years ago, but it’s ridiculous now. It’s not a great way to present readable content (at all!)

Luckily, it’s also much easier to fix nowadays than hacking apart style.css in the theme file editor in the Settings section.

Now, I can just go to Customize > Additional CSS and paste in:

```css
.entry-content,
.entry-summary,
.widget-area .widget,
.comment {
    -webkit-hyphens: none;
    -moz-hyphens: none;
    hyphens: none;
    word-wrap: normal;
}
```

Et voilà!

Recently, with the new hardware acquisitions for the rack giving me more resources to play with, I’ve been looking at ways to host multiple sites across multiple servers.

The properly engineered and much heavier way to do this would be something like a Docker Swarm or a Proxmox HA cluster: something that uses the high availability model and keeps things running. Honestly, though, I haven’t quite reached that stage, or rather, I think there are too many unknowns (to me) in what I want to do.

What I want to achieve

I want to be able to set up my servers so that these websites keep running, and should the hardware fail, they’ll continue to operate by being redeployed with minimal input from me, reducing the effort and cost of keeping things running. The problem I’m trying to solve is two-fold:

- I want to move my personal projects off the server that hosts my paying clients’ sites

- I’d like to get High Availability working for these paying clients

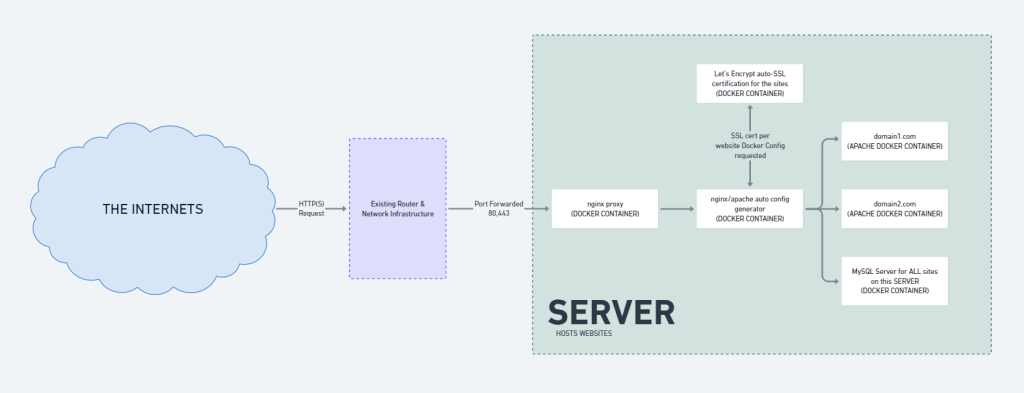

The Existing Stack

The way I served my website content to the greater world was pretty basic. It involved a bunch of Docker containers and some local host mounts, all managed through Docker Compose. It looked something like this:

Overall, it’s quick, it’s simple to execute and do backups with; but it’s restricted to a single physical server. If that server were to have catastrophic hardware failure, that’d be that. My sites and services would be offline until I personally went and redeployed them onto a new server.

The “New Stack”: a first step…

So what’s the dealio?

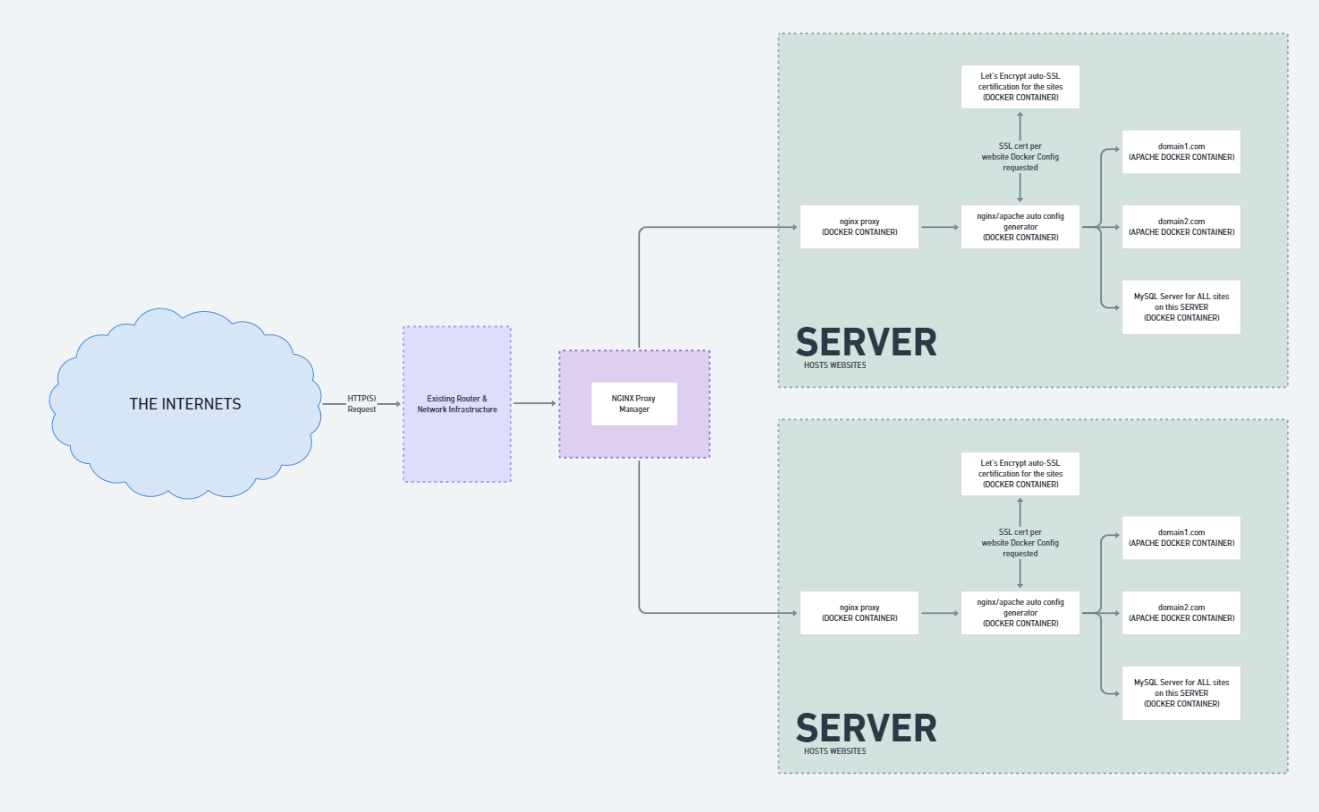

Well, my current webserver stack uses an NGINX reverse proxy to pass traffic to the appropriate website containers, but what if those containers are on MULTIPLE servers? Taking my sister’s and my own website projects as an example:

| Sarah’s Sites (server 1) | JT’s Sites (server 2) |
| --- | --- |
| sarahtiong.com | jtiong.com |
| store.sarahtiong.com | jtiong.blog |

The above shows how the sites could be distributed across 2 different servers. The problem is that I can only forward ports 80 and 443 (HTTP/HTTPS) to one IP at a time. The solution?

NGINX Proxy Manager – this should be a drop-in solution on top: by installing it on a new third server, all traffic from the internet gets routed to it, and it points each request to the right server as needed.
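Conceptually, the proxy looks at the requested hostname and forwards the request to whichever server hosts that site. Here is a minimal sketch of just that lookup, using the site split from the table above. The two LAN addresses are hypothetical, and this illustrates the routing idea only, not how NGINX Proxy Manager is implemented.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical internal addresses for the two web servers.
SERVER_1 = "192.168.1.11"  # Sarah's sites
SERVER_2 = "192.168.1.12"  # JT's sites

# Hostname -> backend server, mirroring the site split in the table.
ROUTES = {
    "sarahtiong.com": SERVER_1,
    "store.sarahtiong.com": SERVER_1,
    "jtiong.com": SERVER_2,
    "jtiong.blog": SERVER_2,
}


def backend_for(url: str) -> Optional[str]:
    """Return the backend server for a request URL, or None if no route matches."""
    host = urlsplit(url).hostname  # lower-cased, with any port stripped
    if host is None:
        return None
    return ROUTES.get(host)


print(backend_for("https://store.sarahtiong.com/cart"))  # routed to SERVER_1
print(backend_for("https://jtiong.blog/"))               # routed to SERVER_2
```

Unknown hostnames fall through to `None`. In a real proxy, that is where a default site or an error page would come in.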

Something like this:

I’m still left with some single points of failure (my router, the NGINX Proxy Manager server), but the workload is spread across multiple servers in terms of sites and services. Backing up files and configurations all seems relatively simple, and although I’m left with a lot of snowflake situations, I can afford that. The technical debt isn’t so great, as it’s a small number of servers, sites, services and configurations to manage.

So for the time being; this is the new stack I’ve rolled out to my network.

Coming soon, though: the migration of everything from Docker containers to High Availability VMs on Proxmox! Or at least, that’s the plan for now… I’ll probably roll this out over Easter.

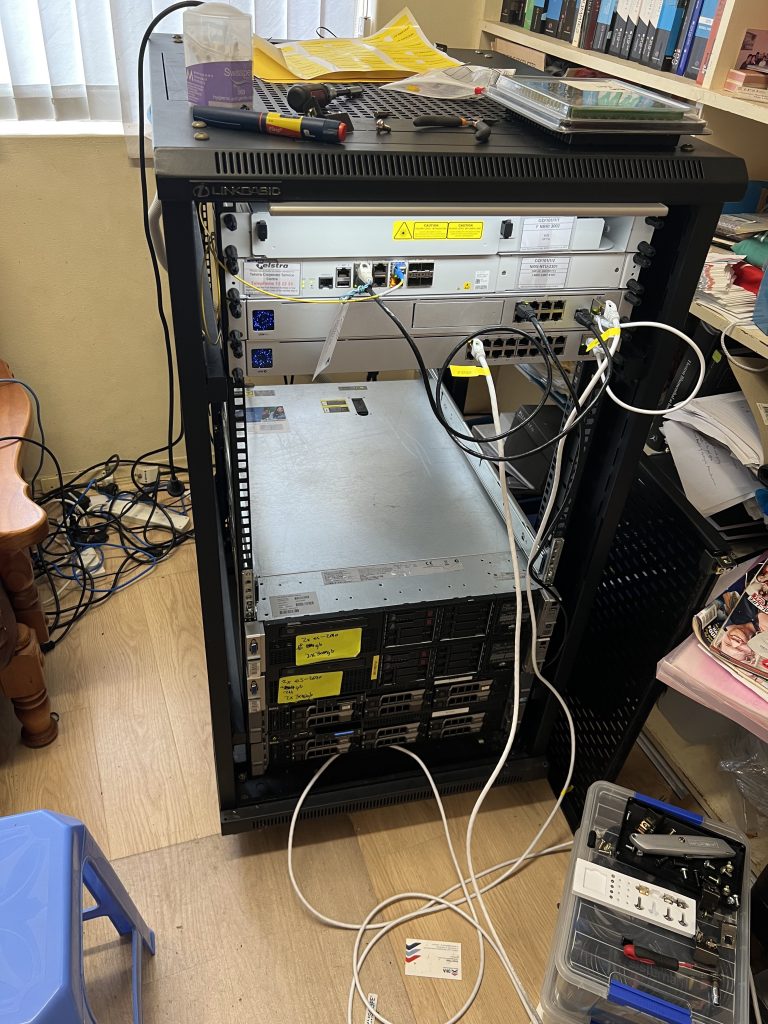

I’ve been busy for quite some time working out how to get the server rack into a more usable state; it’s been an expensive venture, but one I think could work out quite well. This is admittedly just a bunch of ideas I’m having at 5am on a Friday…

I recently had the opportunity to visit my good mate Ben, who had a surplus of server hardware. From him, I managed to acquire:

- 3 x DL380p G8 servers – salvageable into 2 complete servers

- 1 x DL380p G8 server with 25 x 2.5″ HDD bays

- 1 x Dell R330 server

- 2 x rail kits for Dell R710 servers

- 3 x rail kits for the DL380p G8 servers

I spent all of Saturday and Sunday (26th and 27th) re-racking everything so that I could move the rack’s internal posts far enough apart to support the rail kits. I couldn’t believe I’d gone so long without touching the rack that I didn’t know I had to do that…

All-in-all, honestly, lesson learnt. What a mess…!

I’m so thankful that, in a sense, I only needed to do this once. I hope…

I think it’s quite safe to say that 2020 has been an insane year.

I didn’t do my usual post at the end of 2019, and I didn’t do much with my blog at the start of this year either. There are a lot of reasons for that, but on both a personal level and indeed a global level, I think 2020 is a year my generation will remember for quite a while.

It’s a year in which the global markets stopped and then, through sheer force of will, carried on. Internet-based remote services and tools were forced into a level of maturity that, until now, only a novel few had dipped their toes into. Indeed, this very post is all about figuring out how to work from anywhere, at any time.

2020 brought COVID-19, and with it significant health risks and situations in my life that, honestly, I never thought I’d see.

2020 was already going to be a rough year for me, as I shifted my focus towards caring for my elderly mother. I’ve moved back home and have been deciding what to start discarding in an almost Marie Kondo-esque fugue state. My home has always been filled to the brim with old knick-knacks and gadgets; not to the excess you see on Marie Kondo’s show, but still quite impressively full of old tech, clothes and furniture.

There’s a lot to keep track of, and I’m in the process of decluttering my life. To do this, I’ve turned to a really interesting application that my friend and colleague Matt pointed me to: Notion (https://notion.so). It’s an all-in-one workspace for collecting notes and data, a bit like Evernote.

It works across iOS, PC and Linux, and it’s a very responsive web-based application. It provides a great way to take notes and stay organised, especially in the current hellscape of life right now.

Most of my work nowadays involves documenting, designing and guiding the team I’m in charge of, and the importance of documentation has become all the more apparent to me. For a while now, I hadn’t been able to find a system I liked that would let me create a private knowledge base/wiki.

Cue Wiki.js: a gorgeous-looking wiki package that, while still very much in development, fits right in with how my online infrastructure is designed. It runs from a docker-compose configuration, has both Git and local file backup capabilities, and lets me mount my data as I see fit.

It’s not the most complete application right now, since it’s still heavily in development, but it does some things better and more beautifully than BookStack, my previous wiki of choice. And it doesn’t have the fluff of Atlassian’s Confluence either.

A winner in my books.

Over the weekend, I wrote a service loading daemon for my Minecraft server that lets me interact with players to create custom, scripted actions and dynamically run commands, all tied into a database thanks to the comfort of PHP!

I’ve aptly named the system the Minecraft Assistant Interactive Daemon (MAID for short). So far things have been working wonderfully, but we’re still exploring the possibilities of this tool, from gathering player positions to inventory security and more! The system is based around a PHP script running in a ‘daemon’ mode that never times out and monitors the server console. It reacts to what happens there (picking up commands, events and so forth) and updates things as needed, be it a website, a database or otherwise.
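MAID itself is PHP and lives in a private repo, so this isn’t its code. It’s a rough Python sketch of the same console-watching idea: parse each console line, pick out player commands and dispatch them to handlers. It assumes the vanilla log format `[HH:MM:SS] [Server thread/INFO]: <Player> message` for chat and a made-up `!command` chat convention. The `handle_line` dispatcher and the `vault`/`where` handlers are hypothetical.

```python
import re
from typing import Callable, Dict, List, Optional

# Matches a chat line such as "[12:34:56] [Server thread/INFO]: <Steve> !vault deposit"
# (assumed vanilla log format; newer versions may insert "[Not Secure] " before the name).
CHAT_COMMAND = re.compile(
    r"^\[\d{2}:\d{2}:\d{2}\] \[Server thread/INFO\]: "
    r"(?:\[Not Secure\] )?<(?P<player>\w+)> !(?P<command>\w+)\s*(?P<args>.*)$"
)

Handler = Callable[[str, List[str]], str]


def vault(player: str, args: List[str]) -> str:
    # Hypothetical: a real version would read/write the vault database here.
    return f"vault {' '.join(args) or 'list'} for {player}"


def where(player: str, args: List[str]) -> str:
    # Hypothetical: a real version would ask the server for the player's position.
    return f"locate {player}"


HANDLERS: Dict[str, Handler] = {"vault": vault, "where": where}


def handle_line(line: str) -> Optional[str]:
    """Parse one console line and run the matching handler, if any."""
    match = CHAT_COMMAND.match(line.strip())
    if not match:
        return None  # not a player command; ignore it
    handler = HANDLERS.get(match["command"])
    if handler is None:
        return None  # unknown command
    return handler(match["player"], match["args"].split())


# A daemon would loop over the server's console output forever;
# here a few sample lines stand in for that stream.
for sample in [
    "[12:00:01] [Server thread/INFO]: Steve joined the game",
    "[12:00:05] [Server thread/INFO]: <Steve> !vault deposit diamond",
    "[12:00:09] [Server thread/INFO]: <Alex> !where",
]:
    result = handle_line(sample)
    if result:
        print(result)
```

Keeping the parsing and the handlers separate means new “maid” actions are just new entries in the handler table, while the server itself stays completely vanilla.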

A big benefit of how I’ve implemented MAID is that I can run a vanilla Minecraft server, so the latest versions released by Microsoft can still run without affecting MAID’s functionality.

The current plan is to have:

- Custom vault control – players can protect valuables in a web-managed inventory/delivery system

- Production of materials – a “maid hiring” system, where players can pay an amount of emeralds for various maids that’ll help them acquire resources

The system is essentially a PHP-based plugin wrapper around Minecraft: it doesn’t directly interface or interfere with the Minecraft client’s functionality, but instead runs as a parallel service alongside the server. So the only real drawback is a lack of real-time in-game interaction, and even then, some degree of interaction can be created via the command block system, since all the features of command blocks are directly accessible to MAID.

I’m keeping it in a private repo for the time being; but in the near future I might look into releasing it 🙂

Some months back, I backed a mechanical keyboard, the Keychron K2, on Kickstarter. At the time, I’d been on the hunt for a wireless keyboard I could use with all my personal devices: a keyboard to ‘rule them all’ for every area of computing in my life.

I had three main areas that I wanted to use the keyboard on:

- My desktop PC

- My work PC

- My PS4 at home

Aside from that, it had to be wireless so I wasn’t tethered to a machine; I wanted to be able to pick it up, slip it into my backpack and carry it between the office and home. Bluetooth connectivity was a must in case I decided to use it with some of the other devices I had lying around. The keyboard arrived on Wednesday last week, and I’ve been using it as much as I can over the last 5 days.

So far, I’ve been thrilled with the keyboard: its feel, the key action, the sound and the size make it a very satisfying package to use as my daily driver.

My only complaint so far:

- I hate the placement of the Page Up, Page Down, Home and End keys; they could be better arranged on the right-hand side of the keyboard. I feel the order (from top to bottom) would be better as Home, Page Up, Page Down, End

You can see the layout complaint I have below in the diagram of the keyboard’s layout:

Aside from that, the lighting is great, the keys are easily removable and the keyboard is super maintainable! I’m a huge fan of this keyboard, and I can’t stop gushing about its usability and space-saving 87-key TKL design. I barely miss the numpad (although it now means I need to rethink how I bind my keys for MMO playing!)

All-in-all though, this keyboard scores a very high 8.5/10 for me, and has taken its place in my inventory as my daily driver keyboard; for all my regular code bashing and computing.

This blog entry is more of a personal reminder; now that it’s somewhere on the internet, in theory it shouldn’t ever disappear.

I do a lot of PHP-related coding day to day, from website projects all the way through to browser-based applications, both professionally and personally. I think I’ve hit a point where VS Code, my editor of choice, finally covers almost all my use cases.

The extensions in question:

- Alignment by annsk

- Diff by Fabio Spampinato

- Docker by Microsoft

- Format HTML in PHP by rifi2k

- GitLens by Eric Amodio

- PHP DocBlocker by Neil Brayfield

- PHP Symbols by lin yang

- phpcs by Ioannis Kappas

- Prettify JSON by Mohsen Azimi

- TabOut by Albert Romkes